San Francisco, California

Scientists have found a way to use brain signals to make a computer speak the words a person is trying to say. Their method could one day help people who have lost the ability to speak.

Some illnesses or injuries can cause people to lose the ability to speak. In many cases, a person’s brain may be fine, but they are unable to control the parts of their body used for speaking.

(Source: Z22/Björn Markmann. [CC BY 3.0], via Wikimedia Commons.)

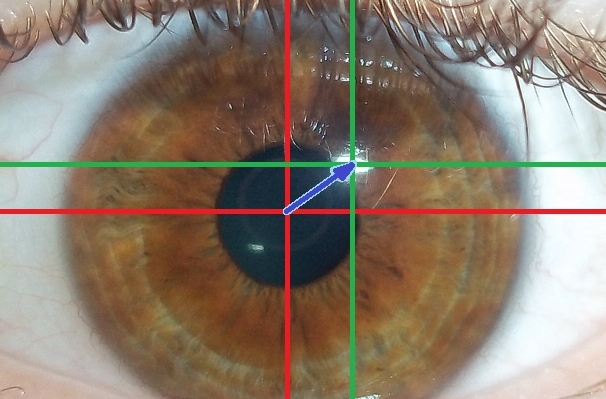

There are some ways for these people to communicate, but they are slow. One method allows a person to “type” by moving their eyes from letter to letter to spell out words. The top speed with this method is about 10 words per minute. Normal human speech is about 150 words per minute.

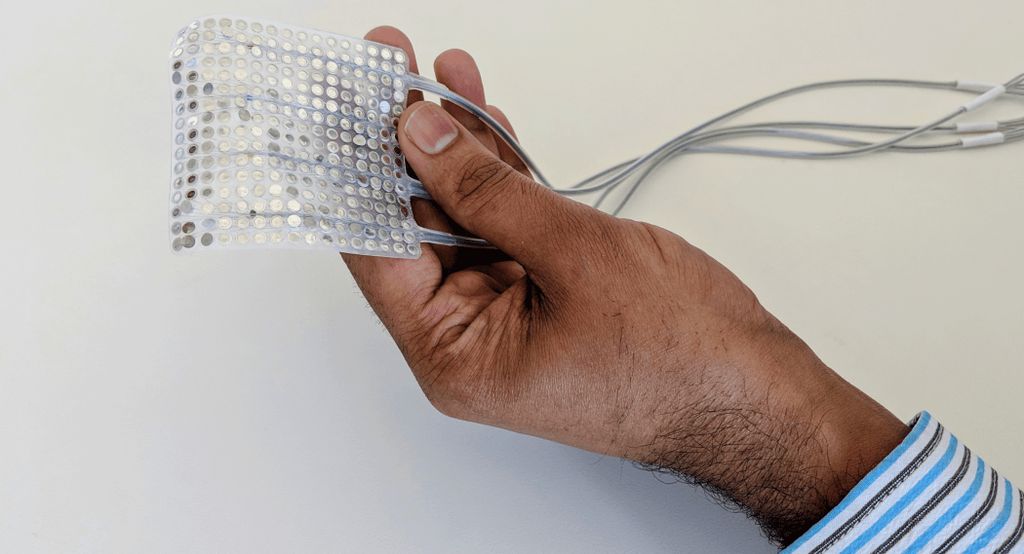

Much recent research has focused on a direct connection between someone’s brain and a computer. This is called a “Brain Computer Interface” (BCI). For many BCIs, people have wires attached to their brains. This allows scientists to track the electrical signals in the brain and connect them to computers. BCIs have led to some amazing discoveries, but they haven’t made communicating much faster.

(Source: UCSF.)

Scientists at the University of California, San Francisco (UCSF) took a different approach. They focused on the muscles a person tries to move when speaking.

The UCSF scientists worked with a group of five people with epilepsy. Epilepsy is a condition where unusual electrical activity in the brain can cause problems with a person's control of their body or senses. These people could speak normally, but they already had temporary BCIs in place as part of their epilepsy treatment.

(Source: UCSF.)

The scientists recorded the brain signals of the people as they read hundreds of sentences like “Is this seesaw safe?” The collection of sentences included all the sounds used in English.

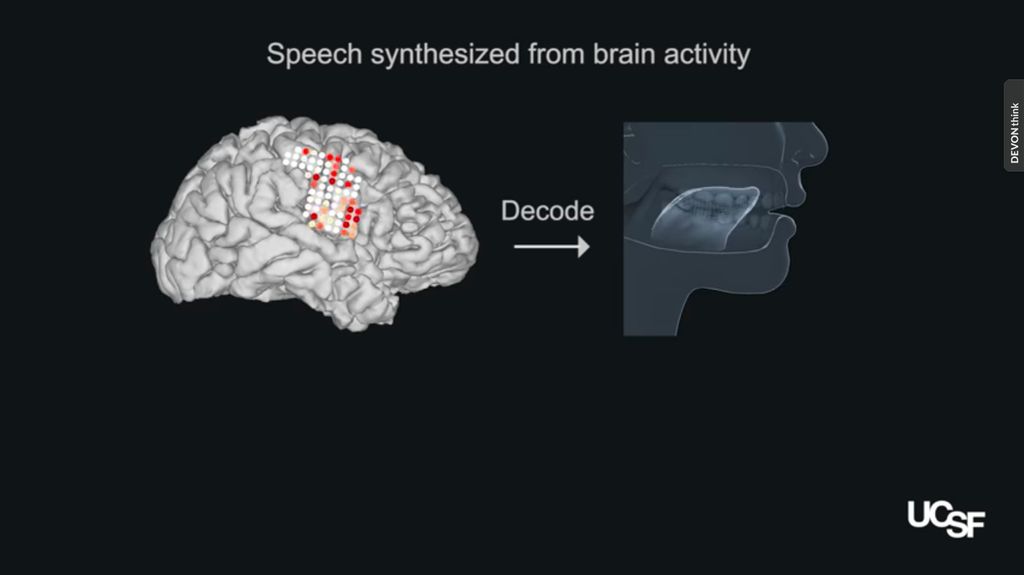

There are about 100 muscles in the tongue, lips, jaw, and throat that are used for speaking. The scientists knew roughly what the shape of the mouth would have to be to make each sound. This allowed them to figure out how the brain signals controlled the speaking muscles.

(Source: UCSF.)

With that information, they could "decode" the brain signals to find out how the person was moving their mouth. Then the scientists were able to "synthesize" speech (create computer speech sounds) based on the position of the speaking muscles. Special computer programs helped in this process. The scientists were surprised at how closely the synthesized speech matched real speech.

As a test, one person didn’t make sounds when he read the sentences. He just moved his mouth as if he were saying them. The system was still able to re-create the sounds he was trying to make, based on his brain patterns.

(Source: Steve Babuljak, UCSF.)

One important discovery is that though each person’s brain signals are different, the muscles used to make each sound are the same for everyone. That will make it easier for a system like this to help many people.

There’s much to learn before a system like this could be used in everyday life, but it’s still exciting progress.
