In January 2020, Robert Julian-Borchak Williams was wrongly arrested for stealing five watches from a store. Though he didn't do it, he was arrested after his face was "recognized" by a computer system. Now he's making a complaint against the Detroit police.

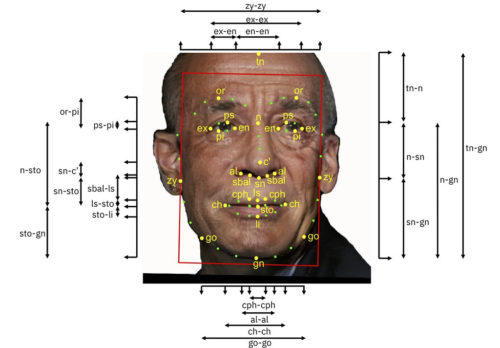

When a computer system identifies a person from their face, it’s called “facial recognition”. Facial recognition programs take measurements of important parts of the face and turn that information into a math formula describing the face. The programs then search for faces with similar formulas.
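For readers curious about the "search for similar formulas" step, here is a tiny sketch in Python. It is a big simplification, not how any real police system works: the names, the three measurements, and the 0.05 cutoff are all made up. Each face becomes a short list of numbers, and the program picks the stored face whose numbers are closest.

```python
import math

# Made-up "face formulas": each face is reduced to three hypothetical
# measurements (for example, eye spacing, nose width, jaw width).
known_faces = {
    "person_a": [0.42, 0.31, 0.77],
    "person_b": [0.40, 0.35, 0.70],
    "person_c": [0.55, 0.28, 0.81],
}

def distance(face1, face2):
    """Straight-line (Euclidean) distance between two lists of measurements."""
    return math.dist(face1, face2)

def closest_match(unknown_face, faces, threshold=0.05):
    """Return the name of the closest stored face, or None if none is close enough.

    The threshold is an assumption. The closest face can still belong to
    the wrong person, which is what a "false match" is.
    """
    best_name = min(faces, key=lambda name: distance(unknown_face, faces[name]))
    if distance(unknown_face, faces[best_name]) <= threshold:
        return best_name
    return None

print(closest_match([0.41, 0.34, 0.71], known_faces))  # prints "person_b"
print(closest_match([0.90, 0.90, 0.90], known_faces))  # prints "None"
```

Notice that the program only says which stored face is *closest*. It cannot tell whether that person really is the one in the picture.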

But facial recognition programs have a mixed record. They usually do well at identifying white men, but they are much less accurate at identifying women and people with darker skin.

(Source: IBM Research, via Flickr.com.)

Still, many police departments use facial recognition. They believe it helps them do their jobs. In some cases, facial recognition has helped identify criminals.

But it’s not unusual for a system to create a “false match” – when the computer identifies a person, but it’s wrong. For years, experts have warned that false matches could make innocent people look like criminals.


When a facial recognition program is wrong, it’s called a “false match”. False matches are more common with women and people with darker skin.

Mr. Williams’s arrest is the first known case of someone being wrongly arrested because of bad facial recognition.

A facial recognition program chose Mr. Williams as the closest match to an image taken from a security video. The person who sent the video to the police also chose Mr. Williams's photo from a group of photos. But that person hadn't been at the store during the robbery.

Mr. Williams was arrested, kept in jail overnight, and questioned. Mr. Williams said that when he was questioned, he held the picture from the security video near his face. He reports that one of the police officers then said, “the computer must have gotten it wrong.”

Even after the officer admitted the mistake, Mr. Williams wasn't released until hours later. Mr. Williams is now working with the ACLU (American Civil Liberties Union) to make a complaint against the Detroit police.

The ACLU is concerned because the police relied on facial recognition instead of doing real police work. Before he was arrested, Mr. Williams wasn't asked where he was when the robbery happened or whether he owned clothes like those worn by the man in the security video.

Mr. Williams says that he wouldn’t have known why he was arrested if the police hadn’t mentioned the facial recognition “match”. He thinks there could be other innocent people who have also been wrongly arrested.

(Source: Marco Vanoli, via Flickr.com.)

Some local governments are taking facial recognition concerns seriously. San Francisco, Boston, and several other cities in Massachusetts have banned city departments, including the police, from using facial recognition.

Even some of the companies behind facial recognition are taking a step back. IBM has dropped its facial recognition program. Microsoft and Amazon are limiting how they sell the technology to police departments.

(Source: Brookings Institution, via Flickr.com.)

But most of the facial recognition tools used by police departments are made by less well-known companies that are still promoting the technology.

IBM, Microsoft, and Amazon have asked the US Congress to make laws that limit the use of facial recognition. Such laws may be the only way to keep innocent people from going to jail because of bad technology.